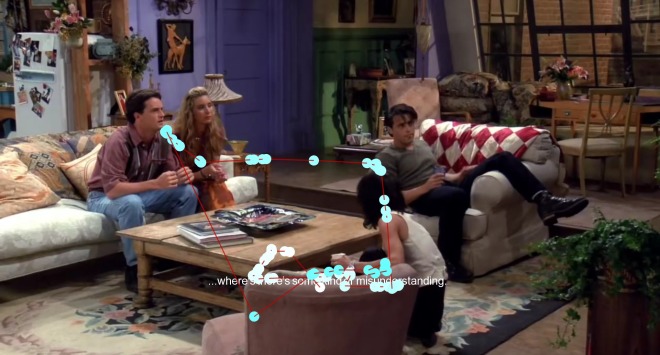

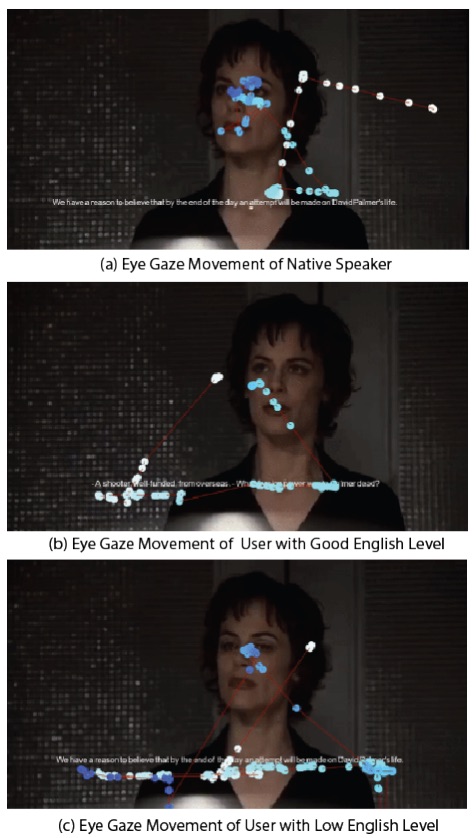

Owing to improvements in the accuracy of eye tracking devices, eye gaze movements that occur while performing tasks can now be monitored just like other life-logging data. Analyzing eye gaze movement data to predict reading comprehension has been widely explored, and researchers have shown the potential of using computers to estimate users’ skill and expertise levels in various categories, including language skills. However, although many researchers have worked specifically on written texts to improve users’ reading skills, little research has analyzed eye gaze movements while watching movies, a popular and successful medium for studying English because it involves reading, listening, and even speaking, the last of which is attributed to language shadowing. In this research, we focus on movies with subtitles, because subtitles help viewers grasp what is occurring on screen and therefore support overall understanding of the content. We observed that viewers’ eye gaze movements differ depending on their English level. After collecting the viewers’ eye gaze movement data, we implemented a machine learning algorithm to detect their English levels and created a smart subtitle system called SubMe. The goal of this research is to estimate English levels by tracking eye movements while users view a movie with subtitles. Our aim is to create a system that gives users feedback that can help improve their English study methods.

Reference:

SubMe: An Interactive Subtitle System with English Skill Estimation Using Eye Tracking, Katsuya Fujii and Jun Rekimoto. Augmented Human 2019. ACM DL / PDF