Get connected:

Facebook / LinkedIn

adiyan<at>acm.org

Adiyan Mujibiya, PhD Student

Jun Rekimoto Laboratory

Applied Computer Science Course

Graduate School of Interdisciplinary Information Studies

University of Tokyo

Research Scientist, Rakuten Institute of Technology

I am currently on a full-time appointment with Rakuten Institute of Technology, a think-tank organization within Rakuten Inc.

I oversee Rakuten’s initiatives in mobile innovation, next-generation e-commerce, and transactions, as well as efforts in Human-Computer Interaction, Ubiquitous Computing, and Interaction Design (UI/UX).

Recent Projects:

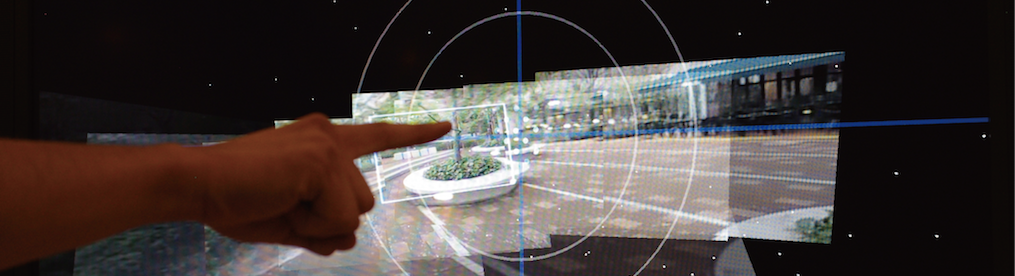

Mirage: Exploring Interaction Modalities Using Off-Body Static Electric Field Sensing

The Sound of Touch: On-body Touch and Gesture Sensing Based on Transdermal Ultrasound Propagation

Research Brief

Electronic devices with significant computational resources have become mobile and dramatically smaller. Yet if we take a close look at these devices, a significant proportion of their area is still dedicated to user input and output: physical keyboards and pointing devices, as well as the widely used touch screen. Arguably, we have not yet found a good general solution that reduces the user interface area while still offering full usability and functionality. To overcome the physical constraints of current devices, off-the-device solutions should be explored. These alternatives include using the user’s own body as an input surface, as well as detecting body motion, gestures, and activity through parasitic or environmental sensing.

The general vision of my research is the Metric Bits concept: interactivity on arbitrary surfaces, in the space between surfaces, on objects, and especially on the human body. The primary objective of this research is to explore novel sensing methods for gesture and activity recognition, enabling more integrated solutions in Human-Computer Interaction (HCI). By augmenting the human body with sensors for computational enhancement, we will eventually shift the paradigm from Human-Computer Interaction to Human-Computer Integration.

The idea is to combine intuitive human senses with sensor data. One concrete example is proprioception, i.e. the human ability to touch one’s own body parts without the sense of sight. Another example is the ability to touch type, i.e. to type without using sight to find the keys. These are clear hints of an open research field in intuitive user interface design.

I have also begun a new thread of research, the Human Wire concept, which focuses on leveraging the capability of the human body (or any biological tissue) to propagate and interfere with signals, both acoustic and electromagnetic, as well as on explorations of Electric Field Sensing.
